Process

Our project began when we chose simulation as our brief, which prompted us to consider the aspects of life that we want to experience but cannot. Intrigued by the idea of experiencing the inaccessible, we gravitated towards revealing the hidden patterns in nature. Our team is interested in the patterns that flowers display under UV light: this is closer to how a pollinator sees the flower, and these intricate patterns are how the flower communicates with the pollinator, guiding it to the pollen.

Proposal, background

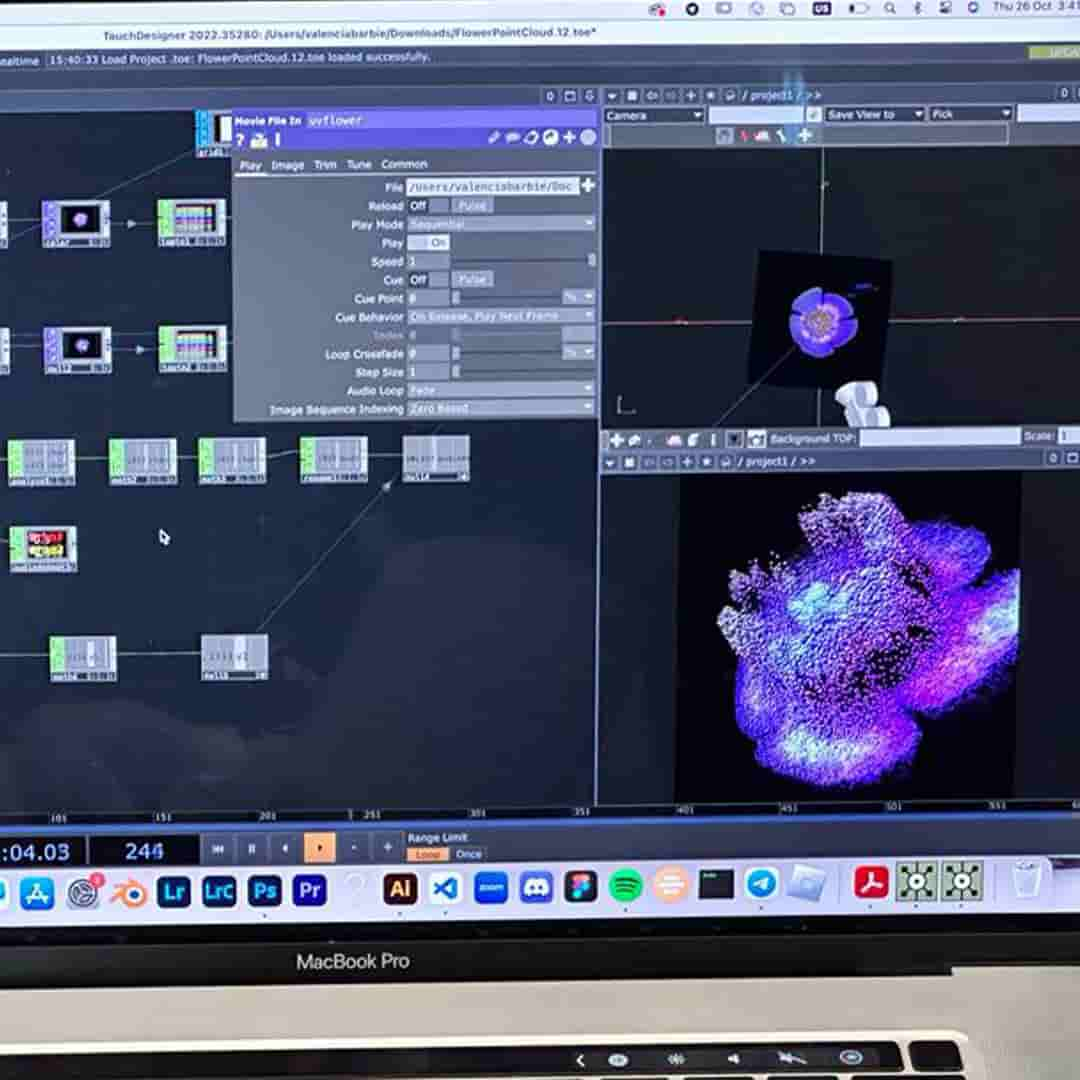

This concept revolves around entering the mystical realm of flowers in the ultraviolet (UV) spectrum, integrating visually captivating elements derived from our study of flowers under UV light. It draws on the creative potential of UV light to reveal colours that are invisible to the human eye. The visuals, inspired by the colours and patterns we observed, are coupled with sound generated by translating the shapes of the flowers into audio. Projected onto a crafted flower structure made of wire, the visuals come alive in the dark and respond dynamically to the audio frequencies. Achieved with TouchDesigner and code, these audio-reactive visuals transform the wire framework into a mesmerising canvas on which we reimagine flowers coming to life in a UV-saturated world through art and technology.

Proposed objective

This project is a study of how flowers are coloured in the UV spectrum. It seeks to humanise the interaction between flower and pollinator through visual and auditory means. By delving into UV coloration, the project becomes an effort to make the unseen visible and to explore a hidden side of nature that usually goes unnoticed.

Our team will look into different ways of photographing the flowers and into the software that can help us compose the visuals and generate the audio.

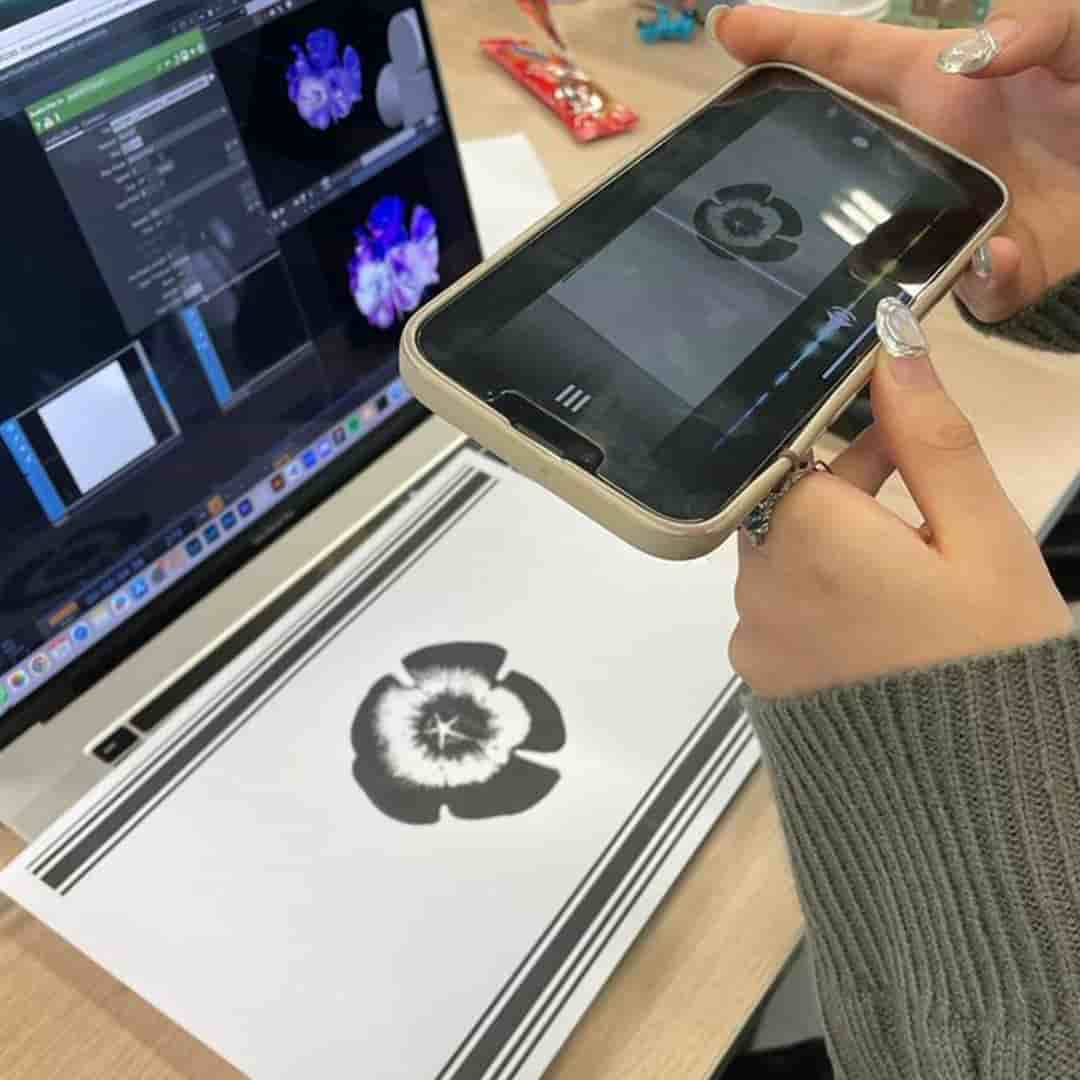

Proposed approach

Our initial approach was to use TouchDesigner as the main generator of visual output and Phonopaper as the visual-to-audio generator. To work with the Phonopaper app, we would convert the photographs of flowers taken under UV lighting to black and white in Photoshop, then place them onto the template the app provides.
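The image-to-audio idea described above can be illustrated with a minimal sketch. This is not Phonopaper's actual algorithm, which we did not inspect. It assumes a simplified spectrogram-style reading: each image column is a slice of time, each row is a frequency, and pixel brightness sets how loud that frequency is. The function names, the frequency range, and the sample rate are all hypothetical choices made for illustration.

```python
import math


def row_frequencies(n_rows, f_low=200.0, f_high=4000.0):
    """Assign one frequency per image row, log-spaced, with the top row highest.

    The 200-4000 Hz range is an assumption chosen for illustration only.
    """
    if n_rows == 1:
        return [f_low]
    ratio = f_high / f_low
    return [f_low * ratio ** ((n_rows - 1 - r) / (n_rows - 1)) for r in range(n_rows)]


def image_to_samples(image, sample_rate=8000, samples_per_column=400,
                     f_low=200.0, f_high=4000.0):
    """Turn a greyscale image into audio samples.

    `image` is a list of rows; each row is a list of brightness values
    between 0.0 (black) and 1.0 (white). Columns are read left to right.
    """
    n_rows = len(image)
    n_cols = len(image[0])
    freqs = row_frequencies(n_rows, f_low, f_high)
    samples = []
    for c in range(n_cols):
        for i in range(samples_per_column):
            t = (c * samples_per_column + i) / sample_rate
            # Sum one sine wave per row, weighted by that pixel's brightness.
            value = sum(
                image[r][c] * math.sin(2 * math.pi * freqs[r] * t)
                for r in range(n_rows)
            )
            # Divide by the row count so the result stays within -1.0..1.0.
            samples.append(value / n_rows)
    return samples
```

A black-and-white flower image prepared in Photoshop would first need to be loaded into this list-of-rows form, and the resulting samples written to a sound file, for example with Python's `wave` module.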

Data collected

We chose the locations where we wanted to pick flowers, gathered the flowers, and photographed them in a makeshift black room studio. The flowers were collected according to categories that we had set before gathering them.

The areas we collected from fall into five categories: Homegrown Plants, Roadside Plants, HDB Estate Plants, Tourism Plants and Local Gardens.

The reason for the categories was to look into the different purposes such plants serve.

In total, we collected 30 different types of flowers from different locations over the course of the project. Some of them come from the same vicinity.

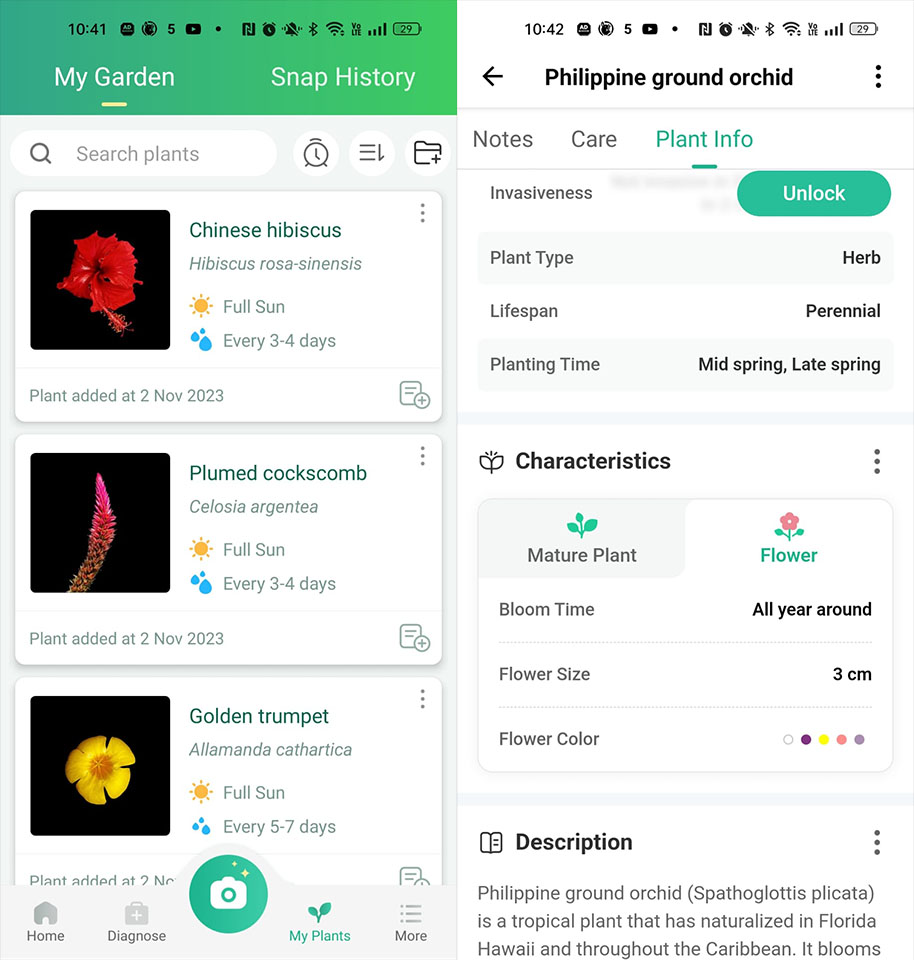

For more data on the flowers, we used the Picturethis app to help us identify the flowers we had picked. The app provides general information about each flower, which we could use in our design process.

The one piece of data we took from the app was the size range of each flower, which we translated into the digital visuals.
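One way to picture the step from size range to digital visuals is the sketch below. It is only an assumption about how such a translation could work: it takes the midpoint of a flower's size range in centimetres and converts it to a pixel diameter at a given screen density. The function name, the midpoint rule, and the default of 96 pixels per inch are hypothetical, and the numbers in the usage line are made up rather than taken from our data.

```python
CM_PER_INCH = 2.54


def size_range_to_pixels(min_cm, max_cm, pixels_per_inch=96):
    """Convert a flower's size range (in cm) to an on-screen diameter in pixels.

    Uses the midpoint of the range, which is an illustrative choice.
    """
    if min_cm <= 0 or max_cm < min_cm:
        raise ValueError("expected 0 < min_cm <= max_cm")
    mid_cm = (min_cm + max_cm) / 2
    return round(mid_cm / CM_PER_INCH * pixels_per_inch)


# Hypothetical flower with a 3-5 cm size range, drawn for a 96 ppi screen.
diameter_px = size_range_to_pixels(3.0, 5.0)
```

Drawing each flower at the returned diameter keeps the relative sizes of the flowers consistent across the visuals.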

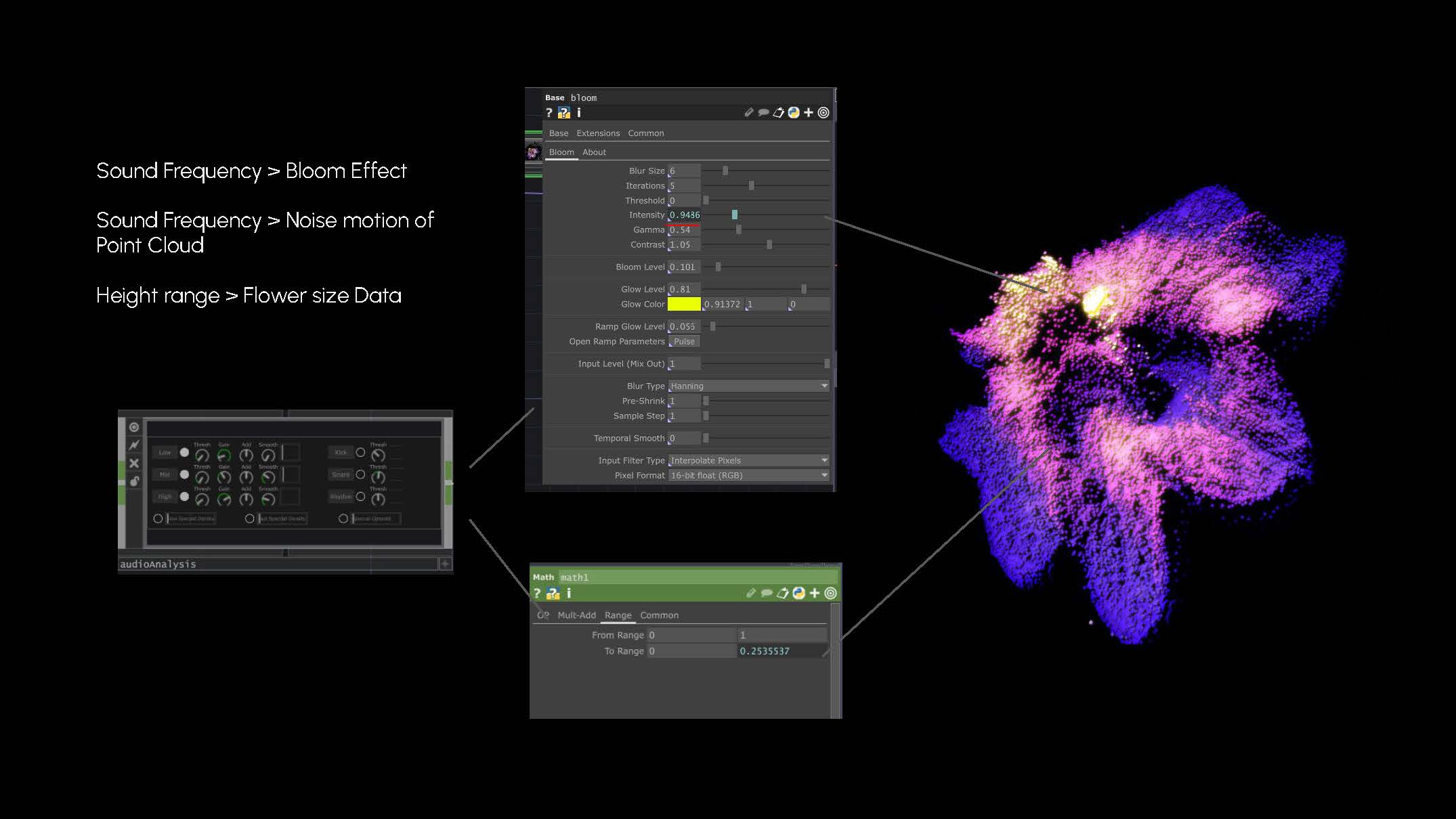

Digital Artefact

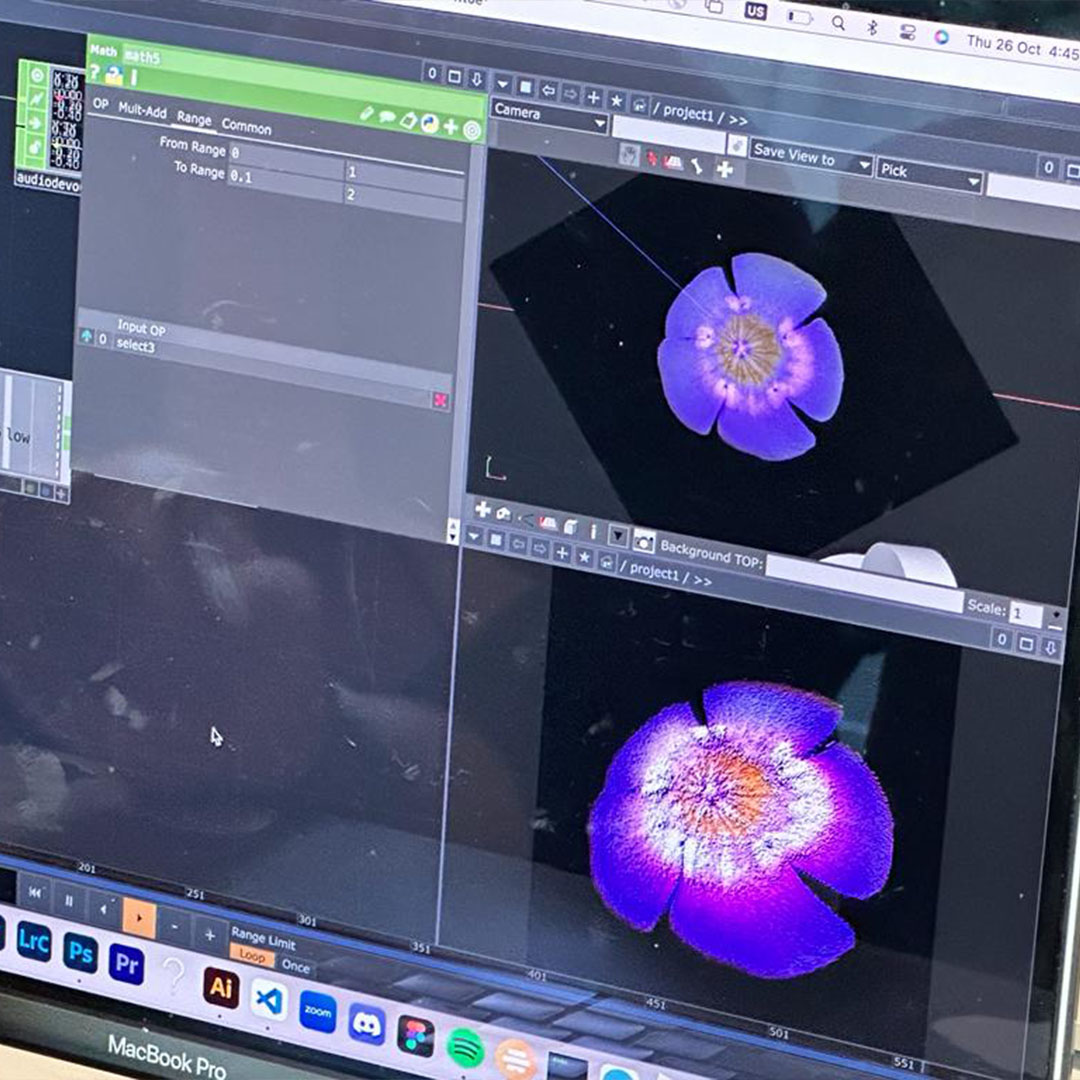

The digital artefact takes the form of a video in which the audio is recorded along with the visuals. The visuals represent how the flowers communicate with pollinators through their colours under UV lighting, and they are accompanied by the audio generated from each flower.

The visuals are generated by having the frequency of the audio control the motion and bloom effect of the flower visuals. Using the audio frequency is crucial because the concept of the project is how flowers communicate with pollinators.
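The mapping from audio frequency to motion and bloom can be sketched in plain Python. This is not the TouchDesigner network we built, and it does not use TouchDesigner's API. It assumes one simple approach: find the strongest frequency in a short frame of audio with a naive discrete Fourier transform, then scale it to a bloom amount and a motion speed. All function names, the frequency range, and the speed range are hypothetical.

```python
import math


def dominant_frequency(samples, sample_rate):
    """Return the strongest frequency (Hz) in a short frame of audio.

    Uses a naive DFT, which is slow but fine for small frames.
    Returns 0.0 for a silent frame.
    """
    n = len(samples)
    best_bin, best_power = 0, 0.0
    for k in range(1, n // 2):
        re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        im = sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        power = re * re + im * im
        if power > best_power:
            best_bin, best_power = k, power
    return best_bin * sample_rate / n


def normalise(value, low, high):
    """Clamp value to [low, high] and rescale it to 0.0..1.0."""
    clamped = min(max(value, low), high)
    return (clamped - low) / (high - low)


def visual_parameters(samples, sample_rate, f_low=200.0, f_high=4000.0):
    """Map the frame's dominant frequency to bloom and motion values.

    The 200-4000 Hz range and the 0.5-2.0 speed range are assumptions.
    """
    freq = dominant_frequency(samples, sample_rate)
    level = normalise(freq, f_low, f_high)
    return {
        "frequency_hz": freq,
        "bloom": level,                     # 0.0 = no bloom, 1.0 = full bloom
        "motion_speed": 0.5 + 1.5 * level,  # higher pitch moves faster
    }
```

Calling `visual_parameters` once per video frame on the most recent slice of audio would give values that could drive the bloom strength and the animation speed of each flower.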

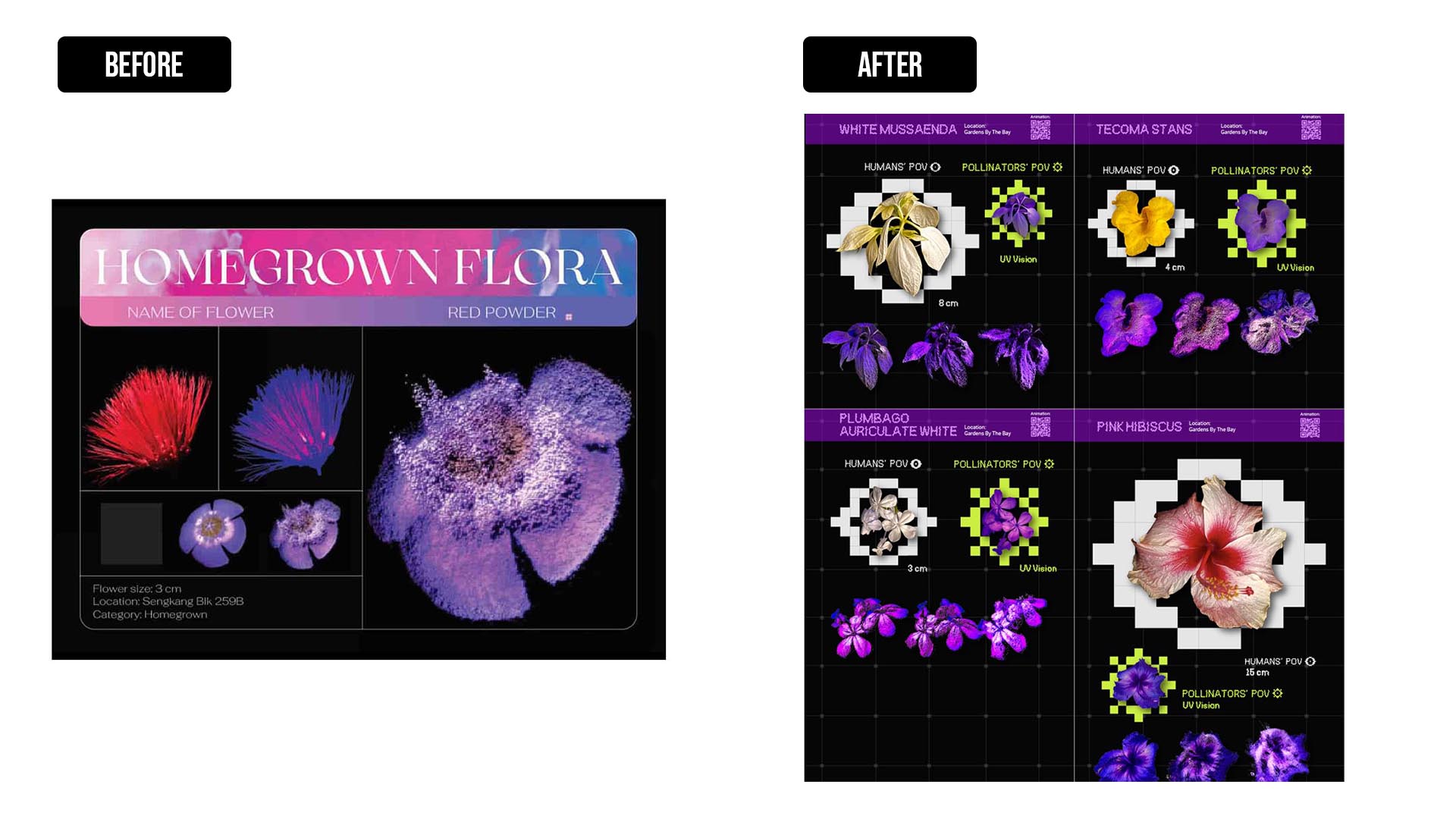

Physical Artefact

There were some changes from our initial layout for the physical artefact. Firstly, the flowers are shown to scale, based on the data we collected, and in two different light settings. The bottom of the layout also shows a preview of how the visuals animate.

The flowers are shown at actual size so that viewers have a reference point to the real flowers. The animation previews spare viewers the trouble of scanning the QR code to watch the animation video, unless they are keen to view it in full and hear the audio.

The physical artefact lets the audience choose what they want to look at and which flowers they may be interested in, giving them options rather than restricting them to scanning the QR codes.

Conclusion

In conclusion, what worked well was that we followed through on the concept we had set, which was to generate audio from imagery and to reinterpret the process of pollination. It produced an interesting outcome that offers both a visual and an auditory experience. The photographs also came out surprisingly well compared with our first attempts.

However, we could have explored further how to push the visuals in TouchDesigner. Currently the visuals do not vary much, and we could have experimented with how the motion varies between different flowers.